So You Want to Build a Programming Language?

For the past six months, I’ve been on a journey to create a new programming language called Pinecone. It’s not yet a mature language, but it already has enough features to be usable, like variables, functions, and user-defined structs. You can check out the Pinecone homepage or its GitHub repository.

I’m not an expert. When I started this project, I had no idea what I was doing, and I still don’t. I haven’t taken any classes on language creation, read only a little about it online, and didn’t follow much of the advice I was given.

And yet, I still created a brand new language. And it works. So I must be doing something right.

In this post, I’ll dive under the hood and show you the pipeline that Pinecone (and other programming languages) use to turn source code into magic.

Getting Started

“I have absolutely no idea where to start” is something I hear a lot when I tell other developers that I’m writing a language. In case that’s your reaction, I’m now going to go over some initial decisions that are made and steps that are taken when starting any new language.

Compiled vs. Interpreted

There are two main kinds of languages: compiled and interpreted:

- A compiler figures out everything a program will do, turns it into “machine code” (a format that the computer can execute very quickly), and saves it to be run later.

- An interpreter goes through the source code line-by-line, figuring out what it’s doing as it goes.

Technically, any language could be either compiled or interpreted, but one or the other usually makes more sense for a given language. Generally, interpreting tends to be more flexible, while compiling tends to have superior performance. But that’s just the tip of the iceberg of a very complex topic.

I value performance highly, and I noticed a lack of programming languages that were both high-performance and geared towards simplicity, so I went with compiled for Pinecone.

This was an important decision to make early on, because many language design decisions are affected by it (for example, static typing is a huge boon for compiled languages, but not so much for interpreted ones).

Despite Pinecone being designed with compilation in mind, it has a fully-functional interpreter that was the only way to run it for a while. There are a number of reasons for this, which I’ll explain later.

Choosing a Language

I know it’s a bit meta, but a programming language is a program itself, and thus you need to write it in a language. I chose C++ because of its performance and large feature set. Also, I just really enjoy working in C++.

If you’re writing an interpreted language, it makes a lot of sense to write it in a compiled language (like C, C++, or Swift) because the performance lost in your interpreter’s language and the interpreter that’s interpreting your interpreter will add up.

If you plan on compiling, a slower language (like Python or JavaScript) is more acceptable. The compile time might be bad, but in my opinion that’s not as important as a bad runtime.

High-Level Design

A programming language is usually structured as a pipeline. That is, it has several stages. Each stage has data formatted in a specific, well-defined way. It also has functions to transform data from each stage to the next.

The first stage is a string containing the entire input source file. The final stage is something that can be executed. This will all become clear as we go through the Pinecone pipeline step-by-step.

Lexing

The first stage in most programming languages is lexing, or tokenizing. ‘Lex’ is short for lexical analysis, a very fancy word for splitting a bunch of text into tokens. The word ‘tokenizer’ makes a lot more sense, but ‘lexer’ is so fun to say that I use it anyway.

A token is a small unit of a language. A token can be a variable or function name (aka an identifier), an operator, or a number.

The lexer’s job is to take a string containing the source code of an entire file and spit out a list containing every token.

Future stages of the pipeline will not refer to the original source code, so the lexer must produce all the information needed by them. The reason for this relatively strict pipeline format is that the lexer can perform tasks like stripping comments or detecting whether something is a number or an identifier. You want to keep this logic locked inside the lexer, both so you don’t have to think about these rules when writing the rest of the language, and so you can change this kind of syntax in a single place.

Parsing

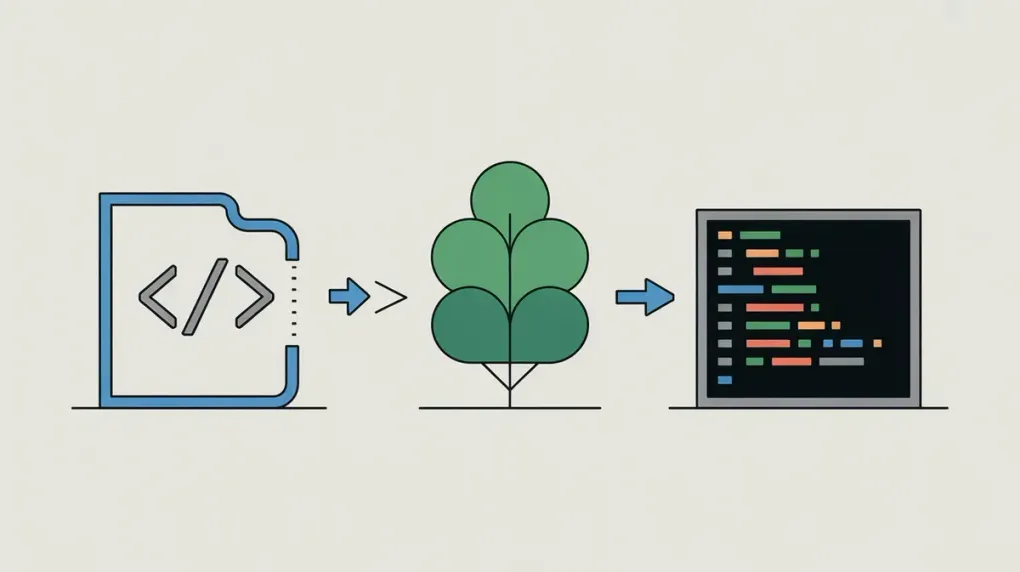

The second stage of the pipeline is the parser. The parser turns a list of tokens into a tree of nodes. A tree used to store this kind of data is known as an Abstract Syntax Tree, or AST. At least in Pinecone, the AST doesn’t have any information about types or which identifiers are which. It is simply structured tokens.

The parser adds structure to the ordered list of tokens that the lexer produces. To avoid ambiguity, the parser must take into account parentheses and the order of operations. Simply parsing operators isn’t terribly difficult, but as more language constructs are added, parsing can become very complex.

Action Tree

Now we leave the area of common, universal terms, or at least I don’t know what the terms are anymore. From what I can gather, what I call the ‘action tree’ is more like LLVM’s IR (intermediate representation).

There is a subtle, but very significant difference between the action tree and the abstract syntax tree. It took me a good while to figure out that there should be a difference between them at all (which contributed to the necessity of parser rewrites).

Simply put, the action tree is the AST with context. This context is information like what type a function returns, or that two places a variable is used are, in fact, using the same variable. Because it needs to figure out and remember all this context, the code that generates the action tree needs a lot of namespace lookup tables and other such things.

Executing the Action Tree

Once we have the action tree, executing the code is easy. Each action node has an ‘execute’ function that takes some input, does what the action is supposed to do (including possibly calling sub-actions), and returns the output of the action. This is the interpreter in action.

Compilation Options

“But wait!”, I hear you say, “isn’t Pinecone supposed to be compiled?” Yes, it is. But compiling is harder than interpreting. There are a few possible approaches.

-

Build my own compiler: This seemed like a good idea at first. I love doing things myself, and I was itching for an excuse to get down and dirty with assembly. Unfortunately, writing a portable compiler isn’t as easy as writing some machine code for each element of the language. Due to the number of architectures and operating systems, it’s impractical for any individual to write a cross-platform compiler backend.

-

LLVM: LLVM is a collection of compiler tools. It’s basically a library that will turn your language into a compiled, executable binary. It seemed like the perfect choice, so I dove right in. Unfortunately, I didn’t check the depth of the water and immediately drowned. LLVM, while not as hard as assembly language, is a gigantic and complex library. It’s not impossible to use, and they have good tutorials, but I realized I’d have to practice a bit before I was ready to fully implement a Pinecone compiler with it.

-

Transpilation: I wanted some kind of compiled Pinecone and I wanted it fast, so I turned to a method I knew could work: transpilation. I wrote a Pinecone-to-C++ transpiler and added the ability to automatically compile the output with GCC. This currently works for almost all Pinecone programs (though there are a few edge cases that break it). It’s not a particularly portable or scalable solution, but it works for now.

The Future

Assuming I continue to develop Pinecone, it will have LLVM compilation support sooner or later. I suspect that no matter how much I work on it, the transpiler will never be completely stable and the benefits of LLVM are numerous. It’s just a matter of when I’ll have the time to do a few example projects in LLVM and get the hang of it.

Until then, the interpreter is great for trivial programs and the C++ transpilation works for most things that need more performance.

Conclusion

I hope I’ve made programming languages a little less mysterious for you. If you want to create one, I highly recommend it. There are a ton of implementation details to figure out, but the outline here should be enough to get you started.

Here’s my high-level advice for getting started (remember, I don’t really know what I’m doing, so take it with a grain of salt):

- When in doubt, go interpreted. Interpreted languages are generally easier to design, build, and learn. I’m not discouraging you from writing a compiled one if you know that’s what you want to do, but if you’re on the fence, I’d go interpreted.

- When it comes to lexers and parsers, do whatever you want. There are valid arguments for and against writing your own. In the end, if you think through your design and implement everything sanely, it doesn’t really matter.

- Learn from the pipeline I ended up using. A lot of trial and error went into designing the pipeline I have now. I tried getting rid of ASTs, ASTs that transform into action trees in-place, and other terrible ideas. This pipeline works, so don’t change it unless you have a really good idea.

- If you don’t have the time or motivation to implement a complex, general-purpose language, try implementing an esoteric language like Brainfuck. These interpreters can be only a few hundred lines.

I have very few regrets when it comes to the development of Pinecone. I made a number of bad choices along the way, but I’ve rewritten most of the code affected by those mistakes.

Right now, Pinecone is in a good enough state that it works well and can be easily improved upon. Writing Pinecone has been an extremely educational and enjoyable experience for me, and it’s only just beginning.