Bit Quantization: The Secret to Efficiency in AI and Games (and No, It's Not Quantum!)

There’s a term in the tech world that sounds incredibly complex and futuristic: quantization. The first time I heard it, my mind immediately jumped to quantum physics, qubits, and the computing of the future. The truth, however, is much more practical, down-to-earth, and absolutely essential for the technology we use today, especially in Artificial Intelligence and game engines.

And no, it has nothing to do with Schrödinger’s cat.

What the Heck is Bit Quantization?

Imagine you have a super-precise thermometer that measures temperature to 30 decimal places (e.g., 23.123456789012345678901234567890 °C). All that precision is amazing, but it takes up a lot of space to store and is slow to process. Now, for most everyday situations, do you really need to know the temperature with that level of detail, or is knowing it’s “approximately 23.1 °C” enough?

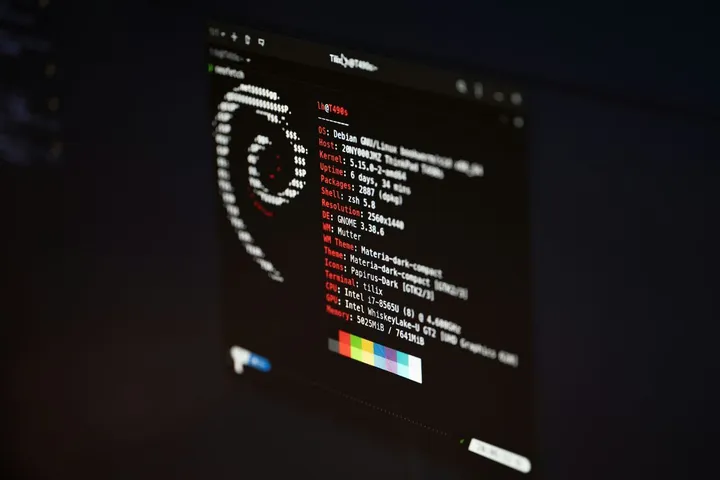

Bit quantization is exactly that. It’s an optimization process that reduces the precision of a number so that it takes up less memory and can be processed faster. Instead of using a 32-bit floating-point number (FP32), which is the standard for high precision, we “flatten” it to a 16-bit version (FP16) or even an 8-bit integer (INT8).

It’s a conscious trade-off: we sacrifice a little bit of precision (which is often imperceptible) to gain a lot of performance.

Why is this a Game-Changer for AI?

Artificial Intelligence models, like the ones that run Gemini, are computational monsters. They have billions of parameters, and each one is a number. Storing and calculating all of this in FP32 requires absurd amounts of processing power and memory (VRAM).

By quantizing these models, developers can:

- Reduce Model Size: A model that used to take up 8 GB of VRAM can now take up 2 GB or less. This means it can run on more modest hardware, like your smartphone or a laptop without a high-end graphics card.

- Speed Up Inference: Calculations with lower-precision numbers are much, much faster for modern processors (CPUs and GPUs). This means the AI can provide an answer almost instantly, instead of taking several seconds.

It’s because of quantization that we now have powerful AIs running locally, without relying 100% on cloud servers.

And in Games?

In game engines, the logic is the same. Think about textures, shaders, animations, physics data. Not everything needs maximum precision all the time. Game developers use quantization to compress this data, resulting in:

- Lower Memory Usage: Allows games to have larger and more detailed worlds without maxing out the memory of your console or PC.

- Faster Loading Times: Less data to load from the disk into memory.

- Better Frame Rates (FPS): The GPU has less work to do processing the data, freeing up resources to render the game more smoothly.

Conclusion: The Magic of Simplicity

So, the next time you’re impressed by the speed of an AI or the vastness of an open world in a game, remember quantization. It’s not quantum technology from the future, but a smart and pragmatic optimization technique. It’s proof that sometimes, to make things work better and faster, the solution isn’t to add complexity, but to intelligently remove it.