The Invisible Tax That Was Slowing Down All of Data Science, and The Hero That Fixed It

Every data scientist knows the feeling. You run a massive query on a distributed engine like Apache Spark, processing terabytes of data. The job finishes in a respectable five minutes. Then, you try to pull that resulting dataset—maybe a few million rows—into your local Python environment to work your magic with Pandas. You watch the progress bar crawl for another ten, fifteen, maybe even twenty minutes. The complex computation was done, so what on earth is the computer doing? Why is the supposedly “simple” act of moving data so painfully slow?

The answer is that your machine was paying a hidden, unnecessary, and deeply inefficient tax: the data serialization tax. And the story of how this tax was abolished involves the creator of Pandas himself deciding to fix a problem that plagued the entire data science world.

The Core Problem: Speaking Different Data Languages

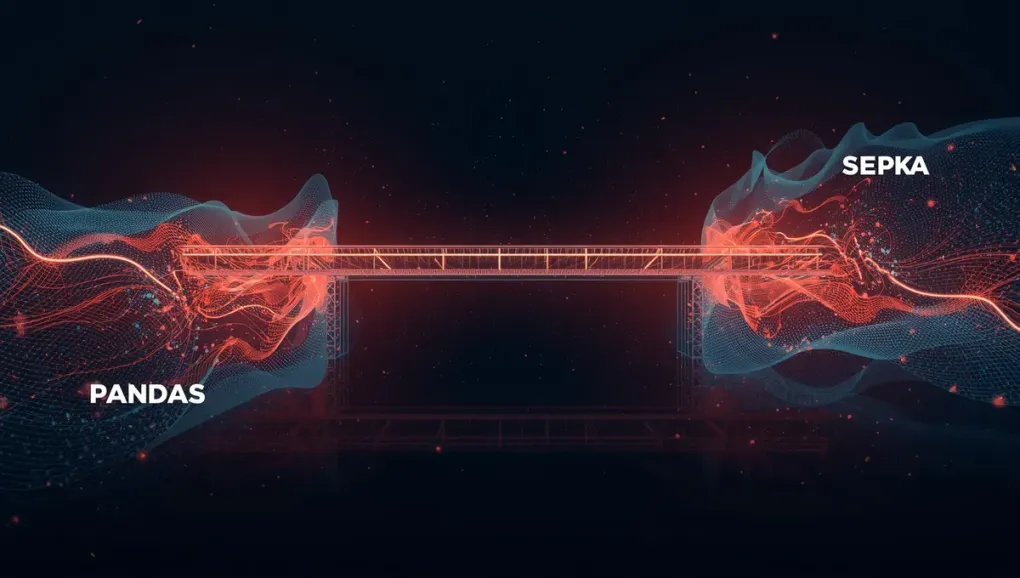

To understand the tax, you have to understand data serialization. Every data processing system has its own internal way of representing a table of data in memory. Spark, which runs on the JVM, has one format. The Python process running Pandas has a completely different one. They don’t speak the same language.

Because of this, moving data between them was a slow, multi-step process:

- Serialize: Spark would take its in-memory data and convert it, row by row, into a generic intermediate format. Text formats like CSV or JSON are the most familiar examples; PySpark's older default path used pickled rows, which pays the same kind of cost.

- Transfer: It would send that blob over a socket or the network to your Python process.

- Deserialize: Your Python process would then have to decode that blob and painstakingly reconstruct it, value by value, into the format that Pandas uses in memory.

This is the tax. The serialization and deserialization steps are computationally expensive. You’re essentially taking a highly optimized, binary data structure, turning it into a dumb, slow-to-parse string format, and then rebuilding it from scratch on the other side. It’s like two people who speak different languages trying to communicate by hiring two separate, slow translators. It works, but it’s a terrible bottleneck.
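To make the three steps concrete, here is a minimal sketch using only Python's standard library. It is an illustration of the general pattern, not Spark's actual transfer code; the tiny `rows` table and its column meanings are made up for the example.

```python
import csv
import io

# A tiny typed "table": each row is (id: int, price: float).
rows = [(1, 9.99), (2, 14.50), (3, 0.25)]

# Serialize: every typed value is rendered as text.
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
payload = buffer.getvalue()  # this string is what would cross the wire

# Deserialize: the receiver parses the text and converts each field
# back into a typed value, one field at a time.
parsed = [
    (int(id_text), float(price_text))
    for id_text, price_text in csv.reader(io.StringIO(payload))
]
```

Nothing new is computed here. All of the work goes into turning numbers into characters and back again, and that cost grows with every row and column.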

The Visionary: Wes McKinney and the Birth of Arrow

This problem frustrated Wes McKinney, the creator of the beloved Pandas library. He saw that this serialization tax wasn’t just a minor inconvenience; it was a massive bottleneck holding back the entire data science ecosystem. He realized the problem wasn’t the tools themselves—Spark and Pandas are both brilliant—but the lack of a common language between them.

His vision was simple but profound: What if all these tools could agree on a single, standardized way to represent tabular data in memory? What if, instead of hiring translators, everyone just learned to speak the same language? If that were the case, moving data between systems would be as simple as handing over a pointer to a location in memory. The translation step—the tax—would vanish.

The Solution: Apache Arrow

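Apache Arrow, launched in 2016 by McKinney together with developers from a dozen or so other open-source data projects, is the realization of that vision. It is not a new data processing tool. It is something more fundamental. Arrow is: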

- A specification for a columnar memory format. It defines a standardized, language-independent way to lay out tabular data in memory. The format is “columnar,” meaning data is organized by column rather than by row, which is vastly more efficient for the kinds of analytical queries that data scientists run (e.g., calculating the average of a single column).

- A set of libraries for most major languages (Python, Java, C++, Rust, etc.) that allow different systems to read and write data in this standardized format.
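The difference between row and column layouts can be sketched in plain Python. This is only an analogy built on the standard library's `array` module, with made-up sample data; Arrow's real format adds further pieces such as validity bitmaps for nulls and offset buffers for strings.

```python
from array import array

# Row-oriented: each record keeps all of its fields together.
rows = [
    {"id": 1, "city": "Oslo", "price": 10.0},
    {"id": 2, "city": "Lima", "price": 20.0},
    {"id": 3, "city": "Pune", "price": 30.0},
]

# Column-oriented: each column is stored on its own. The numeric
# columns live in contiguous, typed buffers.
columns = {
    "id": array("q", [1, 2, 3]),              # 64-bit signed integers
    "city": ["Oslo", "Lima", "Pune"],
    "price": array("d", [10.0, 20.0, 30.0]),  # 64-bit floats
}

# Averaging one column: the row layout has to visit every record ...
row_mean = sum(record["price"] for record in rows) / len(rows)

# ... while the column layout scans a single contiguous buffer.
col_mean = sum(columns["price"]) / len(columns["price"])
```

Both computations give the same answer. The columnar version touches only the data the query actually needs, which is where the efficiency comes from on large tables.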

The magic of Arrow is that it all but eliminates the serialization/deserialization tax. When you move data from an Arrow-enabled Spark instance to an Arrow-enabled Pandas environment, nothing has to be translated value by value. Spark hands over blocks of memory already laid out in the Arrow format, and the Python side can read them as they are. The slow, two-step translation process is replaced by a single, near-instantaneous hand-off.

The Impact: A Faster, More Interoperable World

The adoption of Apache Arrow has been a quiet revolution. The impact has been staggering:

- Massive Speed Improvements: Operations that used to take minutes, like `spark.table().toPandas()`, now often complete in seconds.

- A Thriving Ecosystem: Arrow has become the de facto standard for high-performance data analytics. It has been adopted by dozens of major projects: Spark, Pandas, Dremio, Google BigQuery, and NVIDIA RAPIDS, to name a few. It is the invisible plumbing connecting the modern data stack.

- Enabling New Possibilities: By removing the performance penalty for moving data, Arrow has made it feasible to build tools that seamlessly combine different technologies. You can query with Spark, model with scikit-learn, and visualize with another library, all without paying the serialization tax at each step.
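For the `toPandas()` case mentioned in the list above, the Arrow path in PySpark is controlled by a configuration flag. The sketch below assumes Spark 3.x, where the key is `spark.sql.execution.arrow.pyspark.enabled` (Spark 2.x used `spark.sql.execution.arrow.enabled`). The helper name `to_pandas_with_arrow` is hypothetical, and the caller is expected to pass in an existing `SparkSession`.

```python
def to_pandas_with_arrow(spark, table_name):
    """Collect a Spark table into a pandas DataFrame via Arrow.

    `spark` is assumed to be an existing SparkSession, and
    `table_name` the name of a table it can resolve. This helper is
    a hypothetical convenience wrapper, not part of PySpark.
    """
    # Ask PySpark to use Arrow record batches for the transfer
    # (configuration key as named in Spark 3.x).
    spark.conf.set("spark.sql.execution.arrow.pyspark.enabled", "true")
    return spark.table(table_name).toPandas()
```

A call such as `to_pandas_with_arrow(spark, "sales")`, where `"sales"` is a placeholder table name, would return a pandas DataFrame. How much faster it is depends on the data types involved and the size of the result.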

Conclusion: The Power of a Common Language

Apache Arrow is the unsung hero that solved a problem most people didn’t even know they had. It’s a perfect example of a foundational technology that works so well it becomes invisible. It eliminated a frustrating bottleneck and, in doing so, accelerated the pace of innovation across the fields of data science and AI.

It serves as a powerful reminder that sometimes the most profound innovations aren’t flashy new algorithms, but the creation of a simple, powerful standard that allows everyone else to work better, faster, and more seamlessly together.